Part 5: ROS Actions

Introduction¶

Exercises: 4 essential (plus 2 advanced exercises)

Estimated Completion Time: 3 hours (for the essential exercises only)

Aims¶

In this part of the course you will learn about a third (and final) communication method available within ROS: Actions. Actions are essentially an advanced version of ROS Services, and you will learn about exactly how these two differ and why you might choose to employ an action over a service for certain robotic tasks.

Intended Learning Outcomes¶

By the end of this session you will be able to:

- Recognise how ROS Actions differ from ROS Services and explain where this method might be useful in robot applications.

- Explain the structure of Action messages and identify the relevant information within them, enabling you to build Action Servers and Clients.

- Implement Python Action Client nodes that utilise concurrency and preemption.

- Develop Action Server & Client nodes that could be used as the basis for a robotic search strategy.

Quick Links¶

- Exercise 1: Launching an Action Server and calling it from the command-line

- Exercise 2: Building a Python Action Client Node with concurrency

- Exercise 3: Building a Preemptive Python Action Client Node

- Exercise 4: Developing an "Obstacle Avoidance" behaviour using an Action Server

- Advanced (optional) exercises:

Additional Resources¶

Getting Started¶

Step 1: Launch your ROS Environment

Launch your ROS environment now so that you have access to a Linux terminal instance (aka TERMINAL 1).

Step 2: Restore your work (WSL-ROS Managed Desktop Users ONLY)

Remember that any work that you do within the WSL-ROS Environment will not be preserved between sessions or across different University computers, and so you should be backing up your work to your U:\ drive regularly. When prompted (on first launch of WSL-ROS in TERMINAL 1) enter Y to restore this1.

Step 3: Launch VS Code

WSL users remember to check for this.

Step 4: Make Sure The Course Repo is Up-To-Date

Check that the Course Repo is up-to-date before you start on these exercises. See here for how to install and/or update.

Calling an Action Server¶

Before we talk about what actions actually are, we're going to dive straight in and see one in action (excuse the pun). As you may remember from Part 3, you actually used a ROS Action to make your robot navigate autonomously in Exercise 3, by calling an action server from the command-line. We will do a similar thing now, in a different context, and this time we'll also look at what's going on in a bit more detail.

Exercise 1: Launching an Action Server and calling it from the command-line¶

We'll play a little game here. We're going to launch our TurtleBot3 Waffle in a mystery environment now, and we're going to do this by launching Gazebo headless i.e. Gazebo will be running behind the scenes, but there'll be no Graphical User Interface (GUI) to show us what the environment actually looks like. Then, we'll use an action server to make our robot scan the environment and take pictures for us, to reveal its surroundings!

-

To launch our TurtleBot3 Waffle in the mystery environment, use the following

roslaunchcommand:

TERMINAL 1:

Messages in the terminal should indicate that something has happened, but that's about all you will see!

-

Next, open up a new terminal window (i.e. not a new tab in the same window). Windows Terminal users can do this by pressing the "New Tab" () button whilst pressing the Shift key (we'll call this WT(B)).

-

In WT(B) have a look at all the topics that are currently active on the ROS network (you should know exactly how to do this by now!)

-

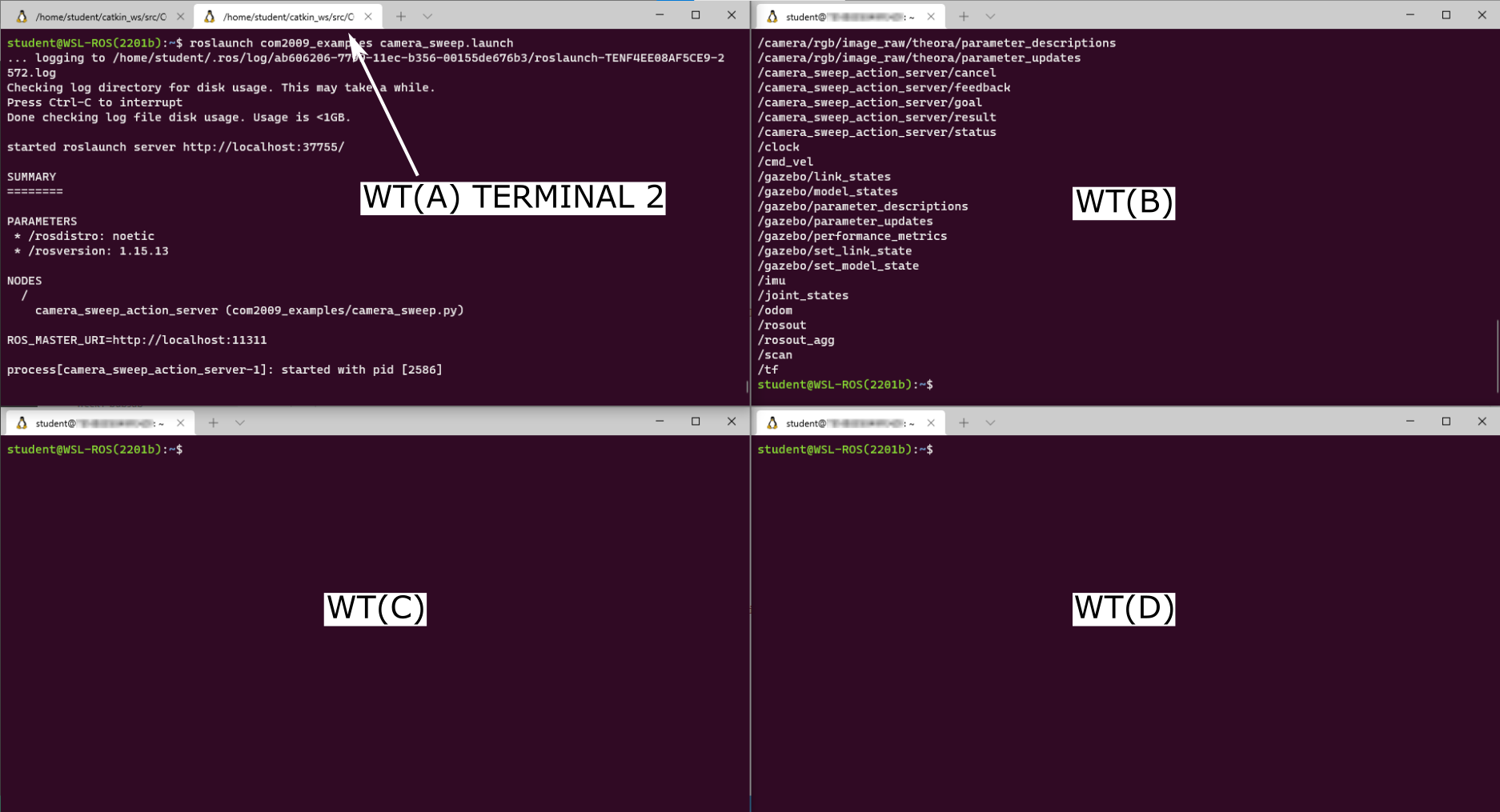

Return to the original Terminal instance (the one with the Gazebo processes running, and which we'll now refer to as WT(A)), open up a new tab (WT(A) TERMINAL 2) and launch an action server that we have already prepared for you for this exercise:

WT(A) TERMINAL 2:

-

Now, return to WT(B) and take a look again at all the topics that are active on the ROS network.

Questions

- What do you notice?

- Anything new there now compared to when you ran the same command before?

You should in fact notice 5 new items in that list:

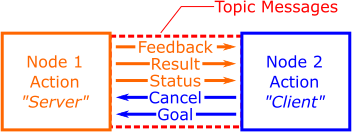

/camera_sweep_action_server/cancel /camera_sweep_action_server/feedback /camera_sweep_action_server/goal /camera_sweep_action_server/result /camera_sweep_action_server/statusA ROS action therefore has five messages associated with it. We'll talk about these in a bit more detail later on, but for now, all we need to know is that in order to call an action, we need to send the action server a Goal (which you may remember doing in Part 3).

Comparing with ROS Services

This is a bit like sending a Request to a ROS Service Server, like we did in the previous session.

-

ROS Actions use topic messages (unlike ROS Services, which use dedicated service messages). We can therefore tap into the ROS network and observe the messages being published to these in exactly the same way as we have done in previous parts of this course using

rostopic echo. In order to monitor some of these messages now, we'll launch a couple more instances of the Windows Terminal, so that we can view a few things simultaneously:- Once again, launch an additional Terminal instance. Again, Windows Terminal users can press the "New Tab" button whilst pressing the Shift key (this one will be called WT(C))

- Do this again to launch another Terminal instance, which we'll call WT(D)

- You should now have four Terminal instances open! Arrange these so that they are all visible, e.g.:

-

In WT(C) run a

rostopic echocommand to echo the messages being published to the/camera_sweep_action_server/feedbacktopic:

WT(C):

To begin with, you'll see the message:

Don't worry about this.

-

Do the same in WT(D), but this time to echo the messages being published to the

/resultpart of the action server message. -

Now, going back to WT(B), run the

rostopic pubcommand on the/camera_sweep_action_server/goaltopic, using the autocomplete functionality in the terminal to help you format the message correctly:

WT(B):

Which should provide you with the following:

-

Edit the

goalportion of the message by using the left arrow button on your keyboard to scroll back through the message. Modify thesweep_angleandimage_countparameters:sweep_angleis the angle (in degrees) that the robot will rotate on the spotimage_countis the number of images it will capture from its front-facing camera while it is rotating

- Once you have decided on some values, hit Enter to actually publish the message and call the action server. Keep an eye on all four terminal instances. What do you notice happening in each of them?

-

Now, in WT(B):

- Cancel the

rostopic pubcommand by entering Ctrl+C -

Once the action had completed, a message should have been published in WT(D) (a "result"), informing you of the filesystem location where the action server has stored the images that have just been captured by the robot:

-

Navigate to this directory in WT(B) (using

cd) and have a look at the content usingll(a handy alias for thelscommand):You should see the same number of image files in there as you requested with the

image_countparameter. -

Launch

eogin this directory and click through all the images to reveal your robot's mystery environment:

WT(B):

- Cancel the

-

Finally, open another tab in the WT(A) terminal instance (WT(A) TERMINAL 3) and launch the Gazebo client to view the simulation that has, until now, been running headless:

WT(A) TERMINAL 3:

-

The actual simulated environment should now be revealed!! To finish off, close down some active ROS processes and Windows Terminal instances that we've just been working with:

- Close down the

eogwindow and WT(B). - Stop the

rostopic echocommands that are running in WT(C) and WT(D) by entering Ctrl+C in each of them and then close each of these Terminal instances too. - Enter Ctrl+C in WT(A) TERMINAL 3 to stop the Gazebo GUI, but keep the terminal tab open.

- Leave the processes running in WT(A) TERMINAL 2 and 1 for now (the Action Server and the headless Gazebo processes).

- Close down the

Summary¶

Phew, that was a long one! Essentially, what we did here is launched an action server and then called it from the command-line using rostopic pub. Hopefully, while the action server was performing the task that we had requested, you also noticed that it was providing us with some real-time feedback on how it was getting on (in WT(C)). In the same way as a ROS Service, it should also have provided us with a result (in WT(D)), once the action had been completed. Feedback is one of the key features that differentiates a ROS Action from a ROS Service, but there are other interesting features too, and we'll explore these in more detail now.

What is a ROS Action?¶

As you will have observed from the above exercise, a ROS Action actually seems to work a lot like a ROS Service. We've seen that we have a feedback message associated with an Action though, which is indeed different, but this isn't the main differentiating feature. The key difference is that when a node calls a ROS Action (i.e. an action "Caller" or "Client"), it doesn't need to wait until the action is complete before it can move on to something else: it can continue to do other tasks at the same time. Unlike ROS Services then, ROS Actions are Asynchronous, which makes them useful when implementing robotic behaviours that take a longer time to execute, and which an Action Client might need to be updated on throughout the process.

Recall the five messages associated with the action server from the exercise above, the messages had the following names:

The top item there hints at the most important feature of ROS Actions: they can be cancelled (or "preempted"), which we'll learn more about later.

The other thing to note is that - where we used the rosservice command to interrogate the ROS Services that were active on our ROS network previously - Actions use ROS Topics, so we use rostopic commands to interrogate action servers:

rostopic list: to identify the action servers that are available on the network.rostopic echo: to view the messages being published by a given action server.rostopic pub: to call an action from the command-line.

The Format of Action Messages¶

Like Services, Action Messages have multiple parts to them, and we need to know what format these action messages take in order to be able to call them. We don't have a tool like rossrv to do this for Actions though, instead we have to use rosmsg, or look for the message definition inside the Action Message Package.

We ran rostopic list to identify our action server in the previous exercise, which told us that there was an action server running called /camera_sweep_action_server:

rostopic list

[some topics...]

/camera_sweep_action_server/cancel

/camera_sweep_action_server/feedback

/camera_sweep_action_server/goal

/camera_sweep_action_server/result

/camera_sweep_action_server/status

[some more topics...]

"Cancel" and "Status"¶

Every ROS Action has both a cancel and status message associated with them. These are standardised, so the format of these two messages will always be the same, regardless of the type of Action Server we use. We won't worry about these too much for now, but we'll make use of them in some ROS Nodes that we'll build in a short while.

The feedback, goal and result messages will be different for any given action server though, and so we need to know about the format of all of these before we attempt to make a call to the action server.

We can run rostopic info on any of these to find out more about them...

"Goal"¶

Let's look at the goal to start with:

TERMINAL 1:

From which we obtain the usual information:Type: tuos_msgs/CameraSweepActionGoal

Publishers: None

Subscribers:

* /camera_sweep_action_server (http://localhost:#####/)

The Type field tells us that the action message belongs to the tuos_msgs package, and we can find out more about the goal message by using rosmsg info. You'll be familiar with how this works by now:

Where {messageType} is established from the output of the rostopic info command above:

When working with ROS Actions and the rosmsg command though, we can actually drop the word "Action" in the message Type, so our rosmsg command becomes:

TERMINAL 1:

Which will output:Further Info

rosmsg info tuos_msgs/CameraSweepActionGoal will work as well, but we get a lot of other information in the output that we're not all that interested in. Give it a go and see the difference, if you want to!

In order to call this action server, we need to send a goal, and rosmsg info has just told us that there are two goal parameters that we must provide:

sweep_angle: a 32-bit floating-point valueimage_count: a 32-bit integer

So we know more about our Action Server's Goal now, but there are two other parameters we still know nothing about: Result and Feedback. It's important to know about all three things in order to be able to work with the Action Server effectively, and we can use an alternative approach to interrogate all three at the same time...

"Goal," "Feedback" and "Result"¶

We know, from above, that the /camera_sweep_action_server messages are part of the tuos_msgs package, so we can navigate to the package directory (using roscd) and look at the actual message definition.

TERMINAL 1:

Actions are always contained within an action folder inside the package directory, so we can then navigate into this folder using cd:

TERMINAL 1:

Use the ll command again here to view all the action messages within the package. Here you should see the CameraSweep.action message listed. Run cat on this file to view the full message definition:

TERMINAL 1:

#goal

float32 sweep_angle # the angular sweep over which to capture images (degrees)

int32 image_count # the number of images to capture during the sweep

---

#result

string image_path # The filesystem location of the captured images

---

#feedback

int32 current_image # the number of images taken

float32 current_angle # the current angular position of the robot (degrees)

Questions

- What are the names of the result and feedback message parameters? (There are three parameters in total.)

- What datatypes do these parameters use?

You'll learn how we use this information to develop Python Action Server & Client nodes in the following exercises.

Concurrent Activity¶

An Action Server provides feedback messages at regular intervals whilst performing an action and working towards its goal. This is one way that an Action Client can monitor the progress of the action that it has requested. Another way it can do this is by monitoring the status of an action. Both of these features enable concurrency, allowing an action client to work on other things whilst waiting for the requested behaviour to be completed by the action server.

Exercise 2: Building a Python Action Client Node with Concurrency¶

-

You should only have one Terminal application instance open now, with three terminal tabs in it. TERMINAL 3 should already be idle (i.e. not running any commands), and (if you haven't done so already) enter Ctrl+C in TERMINAL 1 and TERMINAL 2 to stop the headless Gazebo simulation processes and the Camera Sweep Action Server respectively.

-

In TERMINAL 1 create a new package called

part5_actionsusing thecatkin_create_pkgtool as you have done previously. This time, definerospy,actionlibandtuos_msgsas dependencies.Remember

Make sure you're in your

~/catkin_ws/src/folder when you run thecatkin_create_pkgcommand! -

Once again, run

catkin buildon this and then re-source your environment:

TERMINAL 1:

Then:

First:

-

Navigate to the

srcfolder of this package, create a file calledaction_client.py(usingtouch) and set this to be executable (usingchmod). -

Review the code provided here, and the annotations, then copy and paste the code into your newly created

action_client.pyfile. -

Then, in TERMINAL 2, execute the same launch file as before but this time with a couple of additional arguments:

TERMINAL 2:

... which will launch the Gazebo simulation in GUI mode this time, as well as the

/camera_sweep_action_servertoo.

-

In TERMINAL 1, use

rosrunto call the action server with theaction_client.pynode that you have just created...Something not right?

You may need to change the values that have been assigned to the goal parameters, in order for the client to successfully make a call to the server!

The node we have just created, in its current form, uses a feedback callback function to perform some operations while the action server is working. In this case, it simply prints the feedback data that is coming from the Action Server. That's it though, and the

client.wait_for_result()line still essentially just makes the client node wait until the action server has finished doing its job before it can do anything else. This still therefore looks a lot like a service, so let's modify this now to really build concurrency into the client node. -

First, create a copy of your

action_client.pynode and call itconcurrent_action_client.py(you will need to make sure you are still in thesrcdirectory of yourpart5_actionspackage before you run this command):

TERMINAL 1:

-

We want to use the status message from the action server now, and we can find out a bit more about this as follows:

- Use

rostopic info camera_sweep_action_server/statusto find the message type. - Then, use

rosmsg info(using the message type you have just identified) to tell you all the status codes that could be returned by the action server.

You should have identified the following states, listed in the

status_listportion of the message:We can set up our action client to monitor these status codes in a

whileloop, and then perform other operations inside this loop until the action has completed (or has been stopped for another reason). - Use

-

To do this, replace the

client.wait_for_result()line in theconcurrent_action_client.pyfile with the following code: -

Run the

concurrent_action_client.pynode and see what happens this time. Essentially, we know that we can carry on doing other things as long as the status code is less than 2 (eitherPENDINGorACTIVE), otherwise either our goal has been achieved, or something else has happened...

Cancelling (or Preempting) an Action¶

Actions are extremely useful for controlling robotic tasks or processes that might take a while to complete, but what if something goes wrong, or if we just change our mind and want to stop an action before the goal has been reached? The ability to preempt an action is one of the things that makes them so useful.

Exercise 3: Building a Preemptive Python Action Client Node¶

- In TERMINAL 1 you should still be located within the

srcfolder of yourpart5_actionspackage. If not, then go back there now! Create a new file calledpreemptive_action_client.pyand make this executable. -

Have a look at the code here, then copy and paste it into the

preemptive_action_client.pynode that you have just created.Here, we've built an action client that will cancel the call to the action server if we enter Ctrl+C into the terminal. This is useful, because otherwise the action server would continue to run, even when we terminate the client. A lot of the code is similar to the Action Client from the previous exercise, but we've built a class structure around this now for more flexibility. Have a look at the code annotations and make sure that you understand how it all works.

-

Run this using

rosrun, let the server take a couple of images and then enter Ctrl+C to observe the goal cancelling in action.Warning

You'll need to set some values for the goal parameters again!

-

We can also cancel a goal conditionally, which may also be useful if, say, too much time has elapsed since the call was made, or the caller has been made aware of something else that has happened in the meantime (perhaps we're running out of storage space on the robot and can't save any more images!) This is all achieved using the

cancel_goal()method.- Have a go now at introducing a conditional call to the

cancel_goal()method once a total of 5 images have been captured. - You could use the

captured_imagesattribute from theCameraSweepFeedbackmessage to trigger this.

- Have a go now at introducing a conditional call to the

A Summary of ROS Actions¶

ROS Actions work a lot like ROS Services, but they have the following key differences:

- They are asynchronous: a client can do other things while it waits for an action to complete.

- They can be cancelled (or preempted): If something is taking too long, or if something else has happened, then an Action Client can cancel an Action whenever it needs to.

- They provide feedback: so that a client can monitor what is happening and act accordingly (i.e. preempt an action, if necessary).

This mechanism is therefore useful for robotic operations that may take a long time to execute, or where intervention might be necessary.

Creating Action Servers in Python¶

Important

Cancel all active processes that you may have running before moving on.

So far we have looked at how to call an action server, but what about if we actually want to set up our own? We've been working with a pre-made action server in the previous exercises, but so far we haven't really considered how it actually works. First, let's do some detective work... We launched the Action Server using roslaunch in Exercise 1:

Questions

- What does this tell us about the package that the action server node belongs to?

- Where, in the package directory, is this node likely to be located?

- How might we find out the name of the Python node from the

camera_sweep.launchfile?

Once you've identified the name and the location of the source code, open it up in VS Code and have a look through it to see how it all works.

Don't worry too much about all the content associated with obtaining and manipulating camera images in there, we'll learn more about this in the next session. Instead, focus on the general overall structure of the code and the way that the action server is implemented.

-

As a starting point, consider the way in which the action server is initialised and the way a callback function is defined to encapsulate all the code that will be executed when the action is called:

-

Look at how a

/cmd_velpublisher and an/odomsubscriber are defined in external classes:These are imported (at the start of the code) from an external

tb3.pymodule that also lives in the same directory as the action server itself:We do this to simplify the process of obtaining odometry data and controlling the robot, whilst keeping the actual action server code itself more concise. Have a look at the

tb3.pymodule to discover exactly how these Python classes work. -

Look inside the action server callback function to see how the camera sweep operation is performed once the action has been called:

-

Consider the error checking that is performed on the

goalinput variables, and how the call to the action server is aborted should any of these goal requests be invalid: -

Consider how preemption is implemented in the server, and how the Action is stopped on receipt of a preempt request:

-

Also have a look at the way a

feedbackmessage is constructed and published by the server: -

Finally, consider how we tell the server that the action has been completed successfully, how the

resultmessage is published to the caller, and how we make the robot stop moving:

-

Exercise 4: Developing an "Obstacle Avoidance" behaviour using an Action Server¶

Knowing what you now do about ROS Actions, do you think the Service Server/Client systems that we developed in Part 4 were actually appropriate use cases for ROS Services? Probably not! In fact, Action Server/Client methods would have probably been more appropriate!

You are now going to construct your own Action Server and Client nodes to implement a more effective obstacle avoidance behaviour that could form the basis of an effective search strategy. For this, you're going to need to build your own Search Server and Client.

Step 1: Launch a simulation

There's a simulation environment that you can use as you're developing your action server/client nodes for this exercise. Launch the simulation in TERMINAL 1, with the following roslaunch command:

TERMINAL 1:

Step 2: Build the Action Server

-

In TERMINAL 2 navigate to the

srcfolder of yourpart5_actionspackage, create a Python script calledsearch_server.py, and make it executable. -

The job of the Action Server node is as follows:

- The action server should make the robot move forwards until it detects an obstacle up ahead.

- Similarly to the Service Server that you created last part, your Action Server here should be configured to accept two goal parameters:

- The speed (in m/s) at which the robot should move forwards when the action server is called. Consider doing some error checking on this to make sure a velocity request is less than the maximum speed that the robot can actually achieve (0.26 m/s)!

- The distance (in meters) at which the robot should stop ahead of any objects or boundary walls that are in front of it. To do this you'll need to subscribe to the

/scantopic. Be aware that an object won't necessarily be directly in front of the robot, so you may need to monitor a range ofLaserScandata points (within therangesarray) to make the collision avoidance effective (recall the LaserScan callback example and also have a look at theTb3LaserScanclass within thetuos_examples/tb3.pymodule that might help you with this).

-

Whilst your server performs its task it should provide the following feedback to the Action Caller:

-

The distance travelled (in meters) since the current action was initiated.

To do this you'll need to subscribe to the

/odomtopic. Remember that there's aTb3Odometryclass within thetuos_examples/tb3.pymodule that might help you with obtaining this data.Remember also that your robot's orientation shouldn't change over the course of a single action call, only its

linear.xandlinear.ypositions should vary. Bear in mind however that the robot won't necessarily be moving along theXorYaxis, so you will need to consider the total distance travelled in theX-Yplane. You should have done this in the Part 2move_squareexercise, so refer to this if you need a reminder.

-

-

Finally, on completion of the action, your server should provide the following three result parameters:

- The total distance travelled (in meters) over the course of the action.

- The distance to the obstacle that made the robot stop (this should match, or very close to, the distance that was provided by the Action Client in the goal).

- The angle (in degrees) at which this obstacle is located in front of the robot (

Tb3LaserScanclass within thetuos_examples/tb3.pymodule, which may already provide this).

-

An action message has been created for you to use for this exercise:

tuos_msgs/Search.action. Navigate to theactionfolder of thetuos_msgspackage directory (or userosmsg info ...in the terminal) to find out everything you need to know about this action message in order to develop your Action Server (and Client) nodes appropriately. -

We've put together some template code to help you with this. For further guidance though, you should also refer to the code for

/camera_sweep_action_servernode, which we talked about earlier: a lot of the techniques used by/camera_sweep_action_servernode will be similar to what you'll need to do in this exercise. -

Whenever you're ready you can launch your action server from TERMINAL 2, using

rosrun, as below:

TERMINAL 2:

Step 3: Build the Action Client

-

In TERMINAL 3 navigate to the

srcfolder of yourpart5_actionspackage, create a Python script calledsearch_client.py, and make it executable. -

The job of the Action Client node is as follows:

- The client needs to issue a correctly formatted goal to the server.

- The client should be programmed to monitor the feedback data from the Server. If it detects (from the feedback) that the robot has travelled a distance greater than 2 meters without detecting an obstacle, then it should cancel the current action call using the

cancel_goal()actionlibmethod.

-

Use the techniques that we used in the Client node from Exercise 3 as a guide to help you with this. There's also a code template here to help you get started.

-

Once you have everything in place launch the action client with

rosrunas below:

TERMINAL 3:

If all is good, then this client node should call the action server, which will - in turn - make the robot move forwards until it reaches a certain distance from an obstacle up ahead, at which point the robot will stop, and your client node will stop too. Once this happens, reorient your robot (using the

turtlebot3_teleopnode) and launch the client node again to make sure that it is robustly stopping in front of obstacles repeatedly, and when approaching them from a range of different angles.Important

Make sure that your preemption functionality works correctly too, so that the robot never moves any further than 2 meters during a given action call!

Some advanced exercises (if you're feeling adventurous!)¶

Want to do more with the ROS skills that you have now developed?! Consider the following advanced exercises that you could try out now that you know how to use ROS Actions!

Note

We've covered a lot already in this session, and the next exercises are really just suggestions for more advanced things that you may want to explore to push your knowledge further (it may also help with the further work that you will do in Assignment #2...)

Advanced Exercise 1: Implementing a Search strategy¶

What you developed in the previous exercise could be used as the basis for an effective robot search strategy. Up to now, your Action Client node should have the capability to call your Search.action server to make the robot move forwards by 2 meters, or until it reaches an obstacle (whichever occurs first), but you could enhance this further:

- Between action calls, your client node could make the robot turn on the spot to face a different direction and then issue a further action call to make the robot move forwards once again.

- The turning process could be done at random, or it could be informed by the result of the last action call, i.e.: if (on completion) the server has informed the client that it detected an object at an angle of, say, 10° anti-clockwise from the front of the robot, then the client might then decide to turn the robot clockwise in an attempt to turn away from the object before issuing its next action call to make the robot move forwards again.

-

By programming your client node to repeat this process over and over again, the robot would (somewhat randomly) travel around its environment safely, stopping before it crashes into any obstacles and reorienting itself every time it stops moving forwards. This is effectively an implementation of a basic robotic search strategy!

Enhancing this further...

Imagine SLAM was running at the same time too... your robot could be building up a map of its environment in the background as it slowly explored every part of it!

Assignment #2 Checkpoint

Having completed Assignment #1 up to this point, you should have everything you need to tackle Assignment #2 Task 2.

Advanced Exercise 2: Autonomous Navigation using waypoint markers¶

In Part 3 you used SLAM to construct a map of an environment (Exercise 2) and then issued navigation requests to the move_base action server, via the command-line, (Exercise 3) to make your robot move to a zone marker, based on coordinates that you had established beforehand. Now that you know how to build Action Client Nodes in Python you could return to your part2_navigation package and build a new node that makes the robot move sequentially between each zone marker programmatically.

- Your node could cycle through the coordinates of all four of the zone markers (or "waypoints") that you established whilst using SLAM to build a map of the environment (as per Exercise 2).

- Your node could monitor the status of the

move_base_simpleaction call to know when the robot has reached a zone marker, so that it knows when to issue a further action call to move on to the next one. - You could refer to the launch file that you created in Part 3 to launch all the navigation processes that need to be running in order to enable and configure the ROS Navigation Stack appropriately for the TurtleBot3 robot.

Wrapping Up¶

In Part 5 of this course you've learnt:

- How ROS Actions work and why they might be useful.

- How to develop Action Client Nodes in Python which can perform other tasks concurrently to the action they have requested, and which can also cancel the requested action, if required.

- How to use standard ROS tools to interrogate the topic messages used by an action server, allowing you to build clients to call them, and to also allow you to build standalone action servers yourself using bespoke Action messages.

- How to harness this communication method to implement a behaviour that could be used as the basis for a genuine robotic search strategy.

Topics, Services or Actions: Which to Choose?¶

You should now have developed a good understanding of the three communication methods that are available within ROS to facilitate communication between ROS Nodes:

- Topic-based messaging.

- ROS Services.

- ROS Actions.

Through this course you've gained some practical experience using all three of these, but you may still be wondering how to select the appropriate one for a certain robot task...

This ROS.org webpage summarises all of this very nicely (and briefly), so you should have a read through this to make sure you know what's what. In summary though:

- Topics: Are most appropriate for broadcasting continuous data-streams such as sensor data and robot state information, and for publishing data that is likely to be required by a range of Nodes across a ROS network.

- Services: Are most appropriate for very short procedures like quick calculations (inverse kinematics etc.) and performing short discrete actions that are unlikely to go wrong or will not need intervention (e.g. turning on a warning LED when a battery is low).

- Actions: Are most appropriate for longer running tasks (like moving a robot), for longer processing calculations (processing the data from a camera stream) or for operations where we might need to change our mind and do something different or cancel an invoked behaviour part way through.

WSL-ROS Managed Desktop Users: Save your work!¶

Remember, to save the work you have done in WSL-ROS during this session so that you can restore it on a different machine at a later date. Run the following script in any idle WSL-ROS Terminal Instance now:

You'll then be able to restore it to a fresh WSL-ROS environment next time you fire one up (wsl_ros restore).

-

Remember: you can also use the

wsl_ros restorecommand at any time. ↩